For years, data teams have faced a fundamental conflict: use a flexible data lake for unstructured data and machine learning, or a reliable data warehouse for BI and SQL analytics. Operating both creates separate data silos, leading to data duplication, high maintenance costs, and complex pipelines.

This is the core problem the Databricks Lakehouse architecture addresses. So, what is Databricks Lakehouse? It’s an open architecture that merges the flexibility and scale of data lakes with the performance and reliability of data warehouses into one platform.

In this article, I give a factual explanation of the Lakehouse, its components, and its architecture. It is written for data engineers, architects, and business leaders who need to understand and evaluate the platform as part of their data and AI strategy.

What Is Databricks Lakehouse?

The Databricks Lakehouse is an open architecture, built on a data lake foundation, that unifies your data, analytics, and AI. This hybrid approach removes the rigid silos of earlier designs, where data for analytics (BI) was kept separate from data for AI (machine learning).

The core concept is to deliver the reliability, performance, and ACID transactions (Atomicity, Consistency, Isolation, Durability) of a traditional data warehouse directly on the low-cost, flexible object storage of your data lake.

Unlike traditional systems, the Lakehouse architecture:

- Avoids data silos by storing all your data in one place.

- Supports all data types, including structured, semi-structured, and unstructured data.

- Serves all workloads, from SQL analytics and BI to data science and real-time streaming.

This unified approach, built on open-source technologies, ensures that your entire organization, from data analysts to machine learning engineers, can work with the same consistent, up-to-date data.

| Feature / Platform | Data Lake | Data Warehouse | Databricks Lakehouse |
|---|---|---|---|
| Data Types Supported | Structured & unstructured | Structured only | All (structured, semi-structured, unstructured) |
| ACID Transactions | ❌ | ✅ | ✅ |
| Real-Time Analytics | Limited | Limited | ✅ |
| Cost Efficiency | ✅ | ❌ | ✅ |
| AI & ML Support | ✅ | Limited | ✅ |
| Governance & Security | Partial | Strong | ✅ (Unity Catalog) |

Key Components of Databricks Lakehouse Architecture

The Databricks Lakehouse is not a single product but an architecture built from several powerful, integrated components.

Delta Lake

Delta Lake is the open-source storage layer that forms the foundation of the Lakehouse. It extends data lakes built on cloud object storage (such as Amazon S3 or Google Cloud Storage) with critical features:

- ACID Transactions: Brings reliability and data integrity to your data lake.

- Schema Enforcement: Prevents bad data from corrupting your data pipelines.

- Time Travel: Allows you to access previous versions of your data for audits, rollbacks, or reproducing experiments.

- Unified Batch and Streaming: A table in Delta Lake is both a batch table and a streaming source, simplifying data pipelines.
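To make these four features concrete, here is a toy model in plain Python. It is a sketch of the ideas only and not the Delta Lake API: the `ToyDeltaTable` class, its methods, and the sample rows are hypothetical names invented for illustration. Each successful write appends a new immutable version, the schema is checked before anything is committed, and earlier versions stay readable.

```python
from dataclasses import dataclass, field


@dataclass
class ToyDeltaTable:
    """Hypothetical in-memory stand-in for a versioned table (not the Delta Lake API)."""
    schema: dict  # column name -> expected Python type
    _versions: list = field(default_factory=lambda: [[]])  # version 0 is the empty table

    def append(self, rows):
        """Validate every row first, then commit all of them as one new version."""
        for row in rows:
            if set(row) != set(self.schema):
                raise ValueError(f"schema mismatch: {sorted(row)}")
            for col, expected in self.schema.items():
                if not isinstance(row[col], expected):
                    raise TypeError(f"column {col!r} expects {expected.__name__}")
        # All-or-nothing: a new version exists only if every row passed validation.
        self._versions.append(self._versions[-1] + list(rows))
        return len(self._versions) - 1  # the new version number

    def read(self, version=None):
        """Read the latest snapshot, or an earlier one ("time travel")."""
        snapshot = self._versions[-1 if version is None else version]
        return list(snapshot)

    def changes_since(self, version):
        """Rows appended after `version`, as an incremental (streaming-style) reader sees them."""
        return self._versions[-1][len(self._versions[version]):]


table = ToyDeltaTable(schema={"order_id": int, "amount": float})
v1 = table.append([{"order_id": 1, "amount": 9.5}])
try:
    # One bad row rejects the whole batch, including the valid first row.
    table.append([{"order_id": 2, "amount": 3.0}, {"order_id": "x", "amount": 1.0}])
except TypeError as err:
    print("rejected:", err)
v2 = table.append([{"order_id": 3, "amount": 4.0}])

print(len(table.read()))        # rows in the latest version
print(table.read(version=v1))   # time travel: the table as of version 1
print(table.changes_since(v1))  # incremental read: rows added after version 1
```

The same table object serves a full batch read (`read`) and an incremental read (`changes_since`), which is the idea behind treating one table as both a batch table and a streaming source. A real implementation persists the log in object storage and handles concurrent writers, which this sketch leaves out.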

Medallion Architecture

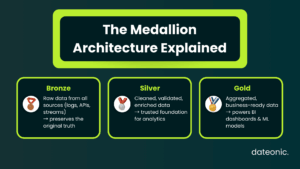

The Medallion architecture is a widely used design pattern for organizing data within the Lakehouse. It structures data into three layers of increasing quality, creating a "multi-hop" path from raw data to business-ready insights.

- Bronze: Raw, ingested data from all sources. This layer preserves the "source truth."

- Silver: Cleaned, validated, and merged data. This is where data is conformed and enriched.

- Gold: Business-level, aggregated data ready for BI and AI. These tables are optimized for analytics.
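The three layers can be sketched as a small pipeline in plain Python. This is an illustration under assumptions, not Databricks code: the sample records, the `to_silver` and `to_gold` functions, and the cleaning rules are all hypothetical. On the platform these steps would normally run as Spark or SQL transformations over Delta tables.

```python
from collections import defaultdict

# Bronze: raw records kept exactly as ingested (hypothetical sample data).
bronze = [
    {"order_id": "1", "customer": " alice ", "amount": "20.00"},
    {"order_id": "2", "customer": "bob", "amount": "not-a-number"},
    {"order_id": "1", "customer": " alice ", "amount": "20.00"},  # duplicate delivery
    {"order_id": "3", "customer": "Alice", "amount": "5.50"},
]


def to_silver(raw_rows):
    """Clean, validate, and deduplicate bronze rows."""
    seen, silver = set(), []
    for row in raw_rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # invalid amounts are skipped; bronze still holds the original
        order_id = int(row["order_id"])
        if order_id in seen:
            continue  # keep only the first copy of each order
        seen.add(order_id)
        silver.append({
            "order_id": order_id,
            "customer": row["customer"].strip().lower(),
            "amount": amount,
        })
    return silver


def to_gold(silver_rows):
    """Aggregate silver rows into a business-level table: revenue per customer."""
    revenue = defaultdict(float)
    for row in silver_rows:
        revenue[row["customer"]] += row["amount"]
    return dict(revenue)


silver = to_silver(bronze)
gold = to_gold(silver)
print(gold)  # {'alice': 25.5}
```

Because bronze is never modified, a bug in the silver rules can be fixed and the downstream layers rebuilt from the original raw data.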

For a deep dive into this framework, read our Databricks Medallion Architecture: Essential Guide.

Unity Catalog

Unity Catalog is the unified governance solution for the Lakehouse. It provides a centralized metastore with:

- Unified Governance: Manage all your data and AI assets (files, tables, ML models) in one place.

- Fine-Grained Security: Control access down to the row and column level using standard SQL.

- Automated Data Lineage: Automatically track data lineage across all queries and languages.

- Secure Data Sharing: Use Delta Sharing to securely share live data with other organizations without copying it.
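Row-level and column-level control is easier to picture with a small example. The sketch below is plain Python and only models the behavior: the `Policy` class, the `query` function, and the sample data are hypothetical, and Unity Catalog itself expresses such rules in SQL rather than through an API like this.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Policy:
    """Hypothetical access policy: which columns a group may see, and which rows."""
    visible_columns: frozenset
    row_filter: Callable[[dict], bool]


def query(rows, policy):
    """Return only permitted rows, projected onto permitted columns."""
    return [
        {col: val for col, val in row.items() if col in policy.visible_columns}
        for row in rows
        if policy.row_filter(row)
    ]


customers = [
    {"name": "Ana", "region": "EU", "email": "ana@example.com"},
    {"name": "Raj", "region": "US", "email": "raj@example.com"},
]

eu_analysts = Policy(
    visible_columns=frozenset({"name", "region"}),  # the email column is hidden
    row_filter=lambda row: row["region"] == "EU",   # only EU rows are returned
)

print(query(customers, eu_analysts))  # [{'name': 'Ana', 'region': 'EU'}]
```

The point of centralizing this in a catalog is that the policy is attached to the data once and applies to every query, whichever tool or language issues it.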

Apache Spark and Photon Engine

The core processing power of Databricks comes from Apache Spark, the open-source engine for large-scale data processing. Databricks complements it with the Photon Engine, a vectorized query engine written in C++ that accelerates SQL and DataFrame workloads, which mainly benefits BI and analytics queries.
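"Vectorized" here means operating on batches of column values instead of one row at a time. The following plain-Python sketch contrasts the two execution styles. It says nothing about Photon's internals, the function names and data are hypothetical, and plain Python gains no real speedup from this layout. In a native engine, the batch form allows tight loops over contiguous memory and CPU SIMD instructions.

```python
# Row-oriented layout: one dict per record.
rows = [
    {"price": 10.0, "qty": 2},
    {"price": 2.5, "qty": 4},
    {"price": 4.0, "qty": 1},
    {"price": 1.0, "qty": 10},
    {"price": 3.0, "qty": 3},
]

# Column-oriented layout: one list per column, same data.
columns = {
    "price": [row["price"] for row in rows],
    "qty": [row["qty"] for row in rows],
}


def total_row_at_a_time(rows):
    """Evaluate price * qty by visiting each record in turn."""
    total = 0.0
    for row in rows:
        total += row["price"] * row["qty"]
    return total


def total_vectorized(columns, batch_size=2):
    """Evaluate price * qty over fixed-size batches of column values."""
    price, qty = columns["price"], columns["qty"]
    total = 0.0
    for start in range(0, len(price), batch_size):
        price_batch = price[start:start + batch_size]
        qty_batch = qty[start:start + batch_size]
        total += sum(p * q for p, q in zip(price_batch, qty_batch))
    return total


print(total_row_at_a_time(rows))  # 53.0
print(total_vectorized(columns))  # 53.0
```

Both functions compute the same result. Only the access pattern differs, and that pattern is what a vectorized engine exploits.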

Other Key Elements

- MLflow: An open-source platform for managing the end-to-end machine learning lifecycle, including experimentation, reproducibility, and deployment.

- Delta Live Tables (DLT): A declarative framework for building reliable and maintainable data pipelines, simplifying the implementation of the Medallion architecture.
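"Declarative" means you describe each table and what it depends on, and the framework works out the execution order. Here is a minimal sketch of that idea in plain Python. The `table` decorator and `run_pipeline` function are hypothetical and are not the DLT API; dependencies are inferred from parameter names purely for illustration, and there is no cycle detection.

```python
import inspect

_tables = {}  # table name -> function that builds it


def table(func):
    """Register a function as a table definition (hypothetical, not the DLT API)."""
    _tables[func.__name__] = func
    return func


@table
def bronze_events():
    return [{"user": "a", "ms": 120}, {"user": "b", "ms": -5}, {"user": "a", "ms": 80}]


@table
def silver_events(bronze_events):
    # Data quality rule: drop rows with a negative duration.
    return [row for row in bronze_events if row["ms"] >= 0]


@table
def gold_total_ms(silver_events):
    totals = {}
    for row in silver_events:
        totals[row["user"]] = totals.get(row["user"], 0) + row["ms"]
    return totals


def run_pipeline():
    """Build every registered table once, resolving dependencies from parameter names."""
    built = {}

    def build(name):
        if name not in built:
            deps = inspect.signature(_tables[name]).parameters
            built[name] = _tables[name](*(build(dep) for dep in deps))
        return built[name]

    for name in _tables:
        build(name)
    return built


results = run_pipeline()
print(results["gold_total_ms"])  # {'a': 200}
```

The table definitions never call each other directly. The runner decides the order, which is what lets a declarative framework also handle retries, incremental updates, and monitoring on your behalf.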

For more information on how these components fit together, see our guide on Databricks Architecture Best Practices.

How Databricks Lakehouse Works

The workflow in a Databricks Lakehouse follows a logical progression, transforming raw data into high-value insights.

- Ingestion: Data from various sources (databases, streaming apps, logs) is landed in its raw format in the Bronze layer using tools like Auto Loader.

- Processing & Curation: Data flows through the Medallion architecture. It is cleaned and validated into Silver tables. Then, business logic is applied to create aggregated, business-ready Gold tables.

- Serving & Consumption: This single source of truth (the Gold tables) is then used to power all workloads:

  - BI & SQL Analytics: Analysts use Databricks SQL to run high-performance queries on the same data.

  - Data Science & ML: Data scientists use notebooks (in Python, SQL, R, or Scala) to explore data and train models, like those used in Generative AI.

- Governance: Unity Catalog provides a secure governance layer over this entire process, ensuring only the right people have access to the right data.

This entire architecture runs on a multi-cloud platform, giving you the flexibility to operate on AWS, Azure, or GCP.
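The ingestion step in this workflow depends on picking up only files that have not been processed yet. The sketch below models that incremental behavior in plain Python. It is a simplified analogue and not the Auto Loader API: the `ingest_new_files` function, the in-memory "landing zone", and the file names are hypothetical.

```python
def ingest_new_files(landing_zone, checkpoint, bronze):
    """Append records from files not seen before; return the names that were ingested."""
    new_files = sorted(name for name in landing_zone if name not in checkpoint)
    for name in new_files:
        for record in landing_zone[name]:
            # Keep the raw record and note which file it came from.
            bronze.append({"source_file": name, **record})
        checkpoint.add(name)  # remember the file so a rerun skips it
    return new_files


# Hypothetical landing zone: file name -> records in that file.
landing_zone = {
    "orders_001.json": [{"order_id": 1}],
    "orders_002.json": [{"order_id": 2}, {"order_id": 3}],
}
checkpoint, bronze = set(), []

first = ingest_new_files(landing_zone, checkpoint, bronze)
landing_zone["orders_003.json"] = [{"order_id": 4}]  # a new file arrives
second = ingest_new_files(landing_zone, checkpoint, bronze)
third = ingest_new_files(landing_zone, checkpoint, bronze)  # nothing new

print(first, second, third, len(bronze))
```

Rerunning the job is safe because the checkpoint makes ingestion idempotent: the third run finds no new files and adds nothing to the Bronze layer.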

Benefits and Use Cases

Adopting a Databricks Lakehouse architecture provides several practical benefits.

Key Benefits

- Cost Savings: Keeping data in low-cost object storage brings storage costs close to those of a data lake while still offering warehouse-style query performance.

- Improved Performance: The Photon engine provides exceptional query speeds for BI dashboards and SQL analytics.

- Unified Governance: Unity Catalog simplifies security and compliance, reducing operational risk.

- Faster AI Deployment: A unified platform for data and AI eliminates friction, allowing data science teams to build and deploy models faster.

- Open Standards: Built on open-source technologies like Delta Lake and Apache Spark, the Lakehouse helps you avoid vendor lock-in.

Databricks Lakehouse Benefits for AI and Real-Time Analytics

The Lakehouse is at its strongest in demanding, modern use cases that traditional systems struggle to support.

- Real-time Analytics: Process and analyze streaming data for applications like fraud detection or real-time logistics tracking, as seen in how Databricks reduces waste in logistics.

- Predictive Analytics: Build sophisticated ML models to forecast demand, predict customer churn, or perform predictive maintenance.

- Customer 360: Create a complete, unified view of your customers by combining batch and streaming data from all touchpoints.

- Generative AI: Use all your enterprise data as a foundation for building and training large language models (LLMs) securely.

Conclusion

So, what is Databricks Lakehouse? It’s the end of the false choice between data lakes and data warehouses. It is a single, open, and unified platform that delivers on the promise of data and AI by providing a reliable, high-performance foundation for every data workload.

By combining the open-source power of Delta Lake with the advanced governance of Unity Catalog, the Databricks Lakehouse is the definitive architecture for any company serious about building a data-driven future.

Contact Dateonic today to build your Lakehouse solution.