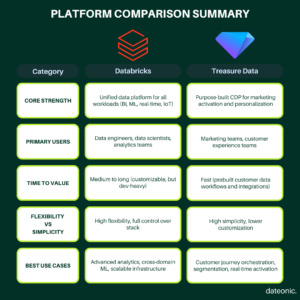

Databricks and Treasure Data represent two very different approaches to enterprise data management. One focuses on deep technical flexibility and unified data workloads; the other prioritizes rapid, marketer-led customer activation.

This comparison helps you decide which path best suits your organization’s goals in 2025.

Strategic Overview

As data strategy becomes core to every business function—from operations to marketing—organizations must decide whether to invest in an extensible platform like Databricks that grows with their needs, or opt for a turnkey solution like Treasure Data that favors speed over scalability.

- Databricks powers enterprise-scale analytics, machine learning, and real-time pipelines across all types of data.

- Treasure Data offers a more opinionated approach tailored to marketing activation—but with significant constraints on flexibility, customization, and long-term cost efficiency.

This article breaks down the strengths and trade-offs of each platform—so you can confidently align your data platform strategy with your organization’s business model, technical maturity, and go-to-market priorities.

Databricks Lakehouse vs Treasure CDP

Databricks: Built for Scale, Performance, and AI

Databricks pioneered the lakehouse architecture, unifying data lakes and warehouses into a single platform. Its architecture supports batch and streaming workloads, machine learning, BI, and beyond—while maintaining governance and performance at scale.

Key components include:

- Delta Lake: Enables ACID-compliant transactions, schema enforcement, and time travel.

- Photon Engine: A vectorized query engine that accelerates SQL workloads for BI and analytics.

- Unity Catalog: Delivers cross-workload data governance and role-based access control.

- Elastic Compute: Allows workloads to auto-scale for cost and performance optimization.

- Native AI/ML Support: Integrates with MLflow, Hugging Face, and other libraries.
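
To make the Delta Lake ideas above more concrete, here is a minimal plain-Python sketch of schema enforcement, all-or-nothing commits, and time travel. It is only a conceptual illustration: the `VersionedTable` class and its methods are hypothetical names invented for this example and are not part of the Delta Lake or Databricks APIs, and a real table format stores a transaction log rather than full snapshots.

```python
class VersionedTable:
    """Toy in-memory table (hypothetical): each commit stores a full snapshot."""

    def __init__(self, schema):
        self.schema = schema        # column name -> expected Python type
        self._versions = [[]]       # version 0 is the empty table

    def append(self, rows):
        """Validate every row first, then commit them as one new version."""
        for row in rows:
            if set(row) != set(self.schema):
                raise ValueError(f"columns {sorted(row)} do not match schema")
            for col, typ in self.schema.items():
                if not isinstance(row[col], typ):
                    raise TypeError(f"{col!r} expects {typ.__name__}")
        # All-or-nothing: the snapshot is only added if every row passed.
        self._versions.append(self._versions[-1] + [dict(r) for r in rows])
        return len(self._versions) - 1      # the new version number

    def read(self, version=None):
        """Return rows at a given version (latest when version is None)."""
        snapshot = self._versions[-1 if version is None else version]
        return [dict(r) for r in snapshot]


table = VersionedTable({"device_id": str, "temp_c": float})
table.append([{"device_id": "a", "temp_c": 21.5}])      # commits version 1
table.append([{"device_id": "b", "temp_c": 19.0}])      # commits version 2
try:
    table.append([{"device_id": "c", "temp_c": "hot"}])  # wrong type: rejected
except TypeError:
    pass
print(len(table.read()))            # latest version still has 2 rows
print(len(table.read(version=1)))   # "time travel" to version 1: 1 row
```

The rejected write leaves the table untouched, which is the behavior that schema enforcement and atomic commits are meant to guarantee.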

Treasure Data: Simplified but Limited CDP

Treasure Data is positioned as a marketer-friendly CDP—but its simplified approach introduces technical and operational trade-offs:

- Basic SQL Support Only: Treasure Data supports a limited SQL dialect and lacks modern capabilities such as MERGE INTO for incremental loads, which limits automation and upsert workflows.

- No Fine-Grained Optimization: Users have limited ability to tune performance or optimize workloads. Query and data pipeline efficiency cannot be easily improved, making scaling difficult and costly.

- Rigid Architecture: It’s not designed for complex, multi-domain data strategies. Custom logic, transformations, and orchestration require awkward workarounds or external tooling.
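
The upsert pattern that `MERGE INTO` expresses can be sketched in a few lines of plain Python. This is an illustration of the semantics only (update rows whose key matches, insert rows whose key is new); the `merge_upsert` function and the sample customer columns are hypothetical and do not represent either vendor's API.

```python
def merge_upsert(target, updates, key):
    """Return merged rows: matched keys are updated, unmatched keys inserted.

    Mirrors the "when matched then update / when not matched then insert"
    pattern. The input lists are not mutated.
    """
    merged = {row[key]: dict(row) for row in target}
    for row in updates:
        if row[key] in merged:
            merged[row[key]].update(row)    # matched: overwrite changed columns
        else:
            merged[row[key]] = dict(row)    # not matched: insert as a new row
    return list(merged.values())


profiles = [
    {"customer_id": 1, "email": "a@example.com", "tier": "free"},
    {"customer_id": 2, "email": "b@example.com", "tier": "free"},
]
daily_changes = [
    {"customer_id": 2, "tier": "pro"},                            # existing key
    {"customer_id": 3, "email": "c@example.com", "tier": "free"},  # new key
]
for row in merge_upsert(profiles, daily_changes, key="customer_id"):
    print(row)
```

Without a merge primitive, an incremental load usually has to be emulated by rebuilding the whole table or by staging and de-duplicating rows in separate steps, which is the automation cost the bullet above refers to.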

According to the CDP Institute, Customer Data Platforms are designed as marketer-managed systems with user-friendly interfaces and pre-built functionalities tailored for marketing needs, whereas platforms like Databricks offer greater technical flexibility suited for data engineering and data science workloads.

Cost Model

Databricks: Elastic & Transparent Pricing

Databricks pricing is usage-based and scales according to compute and storage utilization. Enterprises can optimize cost by fine-tuning clusters, applying spot pricing, and using serverless compute where appropriate.

- Granular visibility into job performance and cost

- Pay only for what you use

- Can reduce compute costs significantly via optimization

Treasure Data: Credit-Based and Rigid

Treasure Data uses a credit-based model in which costs rise with data volume and profile activity. Spend within a tier is fixed, but total cost can be hard to forecast as growing usage pushes an account into higher tiers.

- No clear path to optimization: you spend more as you grow

- Step-wise scaling: pricing follows flat credit tiers rather than flexing with actual usage

- Difficult to forecast ROI beyond initial proof of concept
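
The difference between the two pricing shapes can be sketched with a small calculation. Every number below (hourly rate, tier size, tier price) is a made-up placeholder rather than actual vendor pricing, and the assumption that credits are bought in whole tiers is ours; the function names are hypothetical as well.

```python
import math


def usage_based_cost(compute_hours, rate_per_hour):
    """Bill grows linearly with the compute actually consumed."""
    return compute_hours * rate_per_hour


def credit_tier_cost(credits_needed, credits_per_tier, price_per_tier):
    """Bill grows in steps: a whole tier is bought even if only partly used."""
    tiers = math.ceil(credits_needed / credits_per_tier)
    return tiers * price_per_tier


# Hypothetical inputs, for illustration only.
print(usage_based_cost(1_200, 2.5))            # 3000.0
print(credit_tier_cost(1_000, 1_000, 4_000))   # 4000 (exactly one tier)
print(credit_tier_cost(1_050, 1_000, 4_000))   # 8000 (5% more usage, second tier)
```

Under these assumptions, a small increase in usage near a tier boundary doubles the tiered bill, while the usage-based bill moves in proportion to consumption.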

| Factor | Databricks | Treasure Data |
|---|---|---|
| Implementation Effort | High – engineering required | Low – out-of-the-box |
| Customization Potential | Very high | Moderate to low |
| Optimization & Scaling | Fully controllable | Limited to none |
| AI Capabilities | Advanced & flexible | Minimal |
| SQL & Transformations | Full ANSI SQL + Spark | Basic SQL only |
| TCO Predictability | Variable but controllable | Predictable but high as you scale |

AI & Machine Learning

Databricks: Full AI Stack, Built-In and Open

Databricks supports everything from notebooks to production ML:

- Prebuilt integrations with TensorFlow, PyTorch, XGBoost, and open-source models

- AutoML capabilities for non-technical users

- Full support for real-time inferencing and model serving

- Native MLOps with MLflow for tracking, experimentation, and deployment

Treasure Data: Underdeveloped AI

Treasure Data’s AI features remain basic and limited:

- No robust ML development environment

- Limited native support for predictive modeling or personalization beyond templated rules

- Lacks versioning, experimentation tools, or native model management

- No ability to bring your own models easily

According to Gartner, purchasing a pre-built Customer Data Platform can be more cost-effective and may lead to faster deployment compared to building a custom solution.

IoT Data Management

Treasure Data for IoT

Treasure Data began as a cloud data analytics platform and was positioned for IoT data during its period under Arm ownership, before concentrating on the CDP space. This heritage gives it specialized capabilities for collecting and analyzing device data.

The platform excels at combining customer and product usage analytics to create a complete view of how customers interact with connected products.

Its pre-built connectors simplify integration with common IoT protocols and systems. This makes Treasure Data particularly valuable for organizations looking to analyze customer behavior in conjunction with product telemetry.

Databricks for IoT

Databricks provides a more technically flexible foundation for IoT analytics at scale.

The platform can efficiently store and process the high-volume, high-velocity data typical of IoT deployments. Its advanced analytics and machine learning capabilities enable sophisticated analysis of sensor data, including anomaly detection and predictive maintenance.

The native support for time-series analytics makes it well-suited for temporal analysis of IoT data. Delta Lake ensures reliable storage of continuous IoT data streams even in the face of failures or schema evolution.
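
As a rough illustration of the kind of sensor anomaly detection mentioned above, the sketch below flags readings that deviate strongly from the mean of the preceding window (a rolling z-score). It uses only the Python standard library; the function name, window size, threshold, and sample temperatures are all assumptions chosen for the example, and a production pipeline would typically run equivalent logic on streaming data at far larger scale.

```python
from collections import deque
from statistics import mean, pstdev


def rolling_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings far from the mean of the preceding window."""
    history = deque(maxlen=window)
    flagged = []
    for i, value in enumerate(readings):
        if len(history) == window:          # only score once the window is full
            mu = mean(history)
            sigma = pstdev(history)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                flagged.append(i)
        history.append(value)               # oldest reading drops out automatically
    return flagged


# Hypothetical temperature readings with one spike at index 5.
temps = [20.0, 20.1, 19.9, 20.2, 20.0, 35.0, 20.1, 20.0]
print(rolling_anomalies(temps))   # [5]
```

One design caveat: the spike itself enters the window after it is flagged, which temporarily inflates the standard deviation and makes the detector less sensitive for the next few readings. Excluding flagged values from the history is a common refinement.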

When to Use Databricks Over Treasure Data

Databricks is the stronger choice in scenarios that demand greater technical flexibility, large-scale data processing, and advanced analytics:

- Enterprise-Scale Data Workloads

Organizations managing massive volumes of structured, semi-structured, and unstructured data across multiple domains will benefit from Databricks’ high-performance processing engine, scalable architecture, and support for diverse workloads—including streaming, batch, and ML.

- Advanced Machine Learning and AI Use Cases

For teams focused on predictive analytics, real-time decisioning, and model-driven personalization, Databricks offers robust support for notebooks, MLOps workflows, and integration with open-source ML libraries.

- Cross-Functional Data Platforms

Enterprises looking to build a unified data foundation that serves data engineering, data science, and business intelligence teams alike will find Databricks more suitable than a marketing-centric CDP like Treasure Data.

- Custom Analytics Requirements

When out-of-the-box marketing tools aren’t sufficient, and organizations need tailored segmentation, scoring models, or proprietary algorithms, Databricks’ flexibility enables fully customized solutions.

- Lakehouse-Centric Architecture Strategies

Companies already investing in a lakehouse or open table formats like Delta Lake can extend that foundation with Databricks, reducing data duplication and simplifying governance across use cases.

Can Databricks Replace a Customer Data Platform?

Databricks can in principle replace a CDP, but only with significant custom development.

It provides the technical foundation needed to build CDP capabilities, including data collection, identity resolution, segmentation, and activation.

However, each of these capabilities has to be built and integrated rather than configured, which requires substantial investment.

Organizations typically need 6–12 months to build equivalent CDP functionality on Databricks, compared to 2–3 months for implementing a purpose-built solution like Treasure Data.

Building CDP capabilities on Databricks also requires specialized expertise in identity resolution algorithms, marketing technology integration, and real-time activation that many organizations lack internally.
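
To give a sense of what identity resolution involves, here is a minimal sketch of deterministic matching: records that share any identifier (an email, a phone number, a cookie) are merged into one profile using a union-find structure. The function name, record ids, and identifier formats are hypothetical, and the sketch assumes exact matches only. Real implementations usually add normalization, fuzzy or probabilistic matching, and rules for resolving conflicts.

```python
def resolve_identities(records):
    """Group record ids that share any identifier value.

    records: dict mapping record id -> set of identifier strings.
    Returns a list of sets of record ids, one set per resolved profile.
    """
    parent = {rid: rid for rid in records}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]   # path halving keeps trees shallow
            x = parent[x]
        return x

    first_seen = {}     # identifier -> first record id that carried it
    for rid, identifiers in records.items():
        for ident in identifiers:
            if ident in first_seen:
                parent[find(rid)] = find(first_seen[ident])   # link the two groups
            else:
                first_seen[ident] = rid

    groups = {}
    for rid in records:
        groups.setdefault(find(rid), set()).add(rid)
    return list(groups.values())


# Hypothetical records from three source systems.
records = {
    "web-1": {"email:a@example.com", "cookie:123"},
    "crm-7": {"email:a@example.com", "phone:555"},
    "app-3": {"phone:555"},
    "web-9": {"cookie:999"},
}
for profile in resolve_identities(records):
    print(sorted(profile))
```

Here `web-1` and `app-3` share no identifier directly but end up in the same profile through `crm-7`. Handling that transitive linkage correctly is part of what makes identity resolution harder than a simple join.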

Custom-built solutions additionally require ongoing maintenance as marketing technologies evolve.

For organizations with unique requirements and strong technical resources, building CDP capabilities on Databricks can provide greater customization and control.

However, most organizations find that purpose-built CDPs deliver faster time-to-value for standard marketing use cases.

Cost Comparison

Understanding the total cost of ownership requires considering implementation, annual licensing, and operational factors:

| Category | Databricks (Custom CDP Build) | Treasure Data (Purpose-Built CDP) |
|---|---|---|
| Implementation Cost | $100,000 – $300,000 (engineering-heavy setup) | $50,000 – $150,000 (prebuilt CDP deployment) |
| Annual Operating Cost | $150,000 – $400,000 (compute + platform fees) | $100,000 – $500,000 (based on profile volume) |
| Team Size Needed (FTEs) | 1–3 FTEs (engineering, ML, platform ops) | 0.5–1 FTE (marketing ops, config) |
| Time to Positive ROI | 9–18 months (depending on scope + maturity) | 4–12 months (faster time-to-value) |
| Cost Predictability | Variable – depends on workload + usage patterns | Predictable – based on usage tiers |
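
The ranges in the table can be rolled up into a multi-year estimate with simple arithmetic. The sketch below adds the one-off implementation cost to the annual operating cost over a chosen horizon. The three-year horizon and the `tco_range` helper are assumptions made for illustration, and staffing costs for the listed FTEs are deliberately left out because the table gives no salary figures.

```python
def tco_range(implementation, annual, years):
    """Return (low, high) total cost: one-off implementation plus yearly cost."""
    low = implementation[0] + annual[0] * years
    high = implementation[1] + annual[1] * years
    return low, high


# Dollar ranges taken from the table above; the 3-year horizon is an assumption.
databricks = tco_range((100_000, 300_000), (150_000, 400_000), years=3)
treasure_data = tco_range((50_000, 150_000), (100_000, 500_000), years=3)

print(databricks)      # (550000, 1500000)
print(treasure_data)   # (350000, 1650000)
```

On these figures the two ranges overlap heavily: Treasure Data is cheaper at the low end, while its high end exceeds that of Databricks over three years, so where an organization lands within each range matters more than the platform label.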

Key Takeaways

- Databricks excels at large-scale, cross-functional analytics, offering unmatched flexibility for organizations with strong data engineering and data science capabilities. Its lakehouse architecture is ideal for enterprises seeking unified storage, compute, and governance.

- Treasure Data is purpose-built for marketing use cases, providing faster time-to-value, intuitive tools for non-technical users, and deep integrations with the marketing technology ecosystem.

- Time, resources, and organizational priorities should guide your choice. If your focus is on unified customer experiences and rapid deployment, Treasure Data may be a better fit. If you need full control over data infrastructure and advanced AI/ML capabilities, Databricks is the stronger contender.

- Hybrid adoption is increasingly common—using Databricks for core data science and engineering, while leveraging Treasure Data for customer data orchestration and activation.

To identify the best-fit platform strategy for your business, contact our data platform experts for a tailored consultation. We’ll help you align your technology stack with your business goals and maximize return on investment.