As artificial intelligence reshapes the business landscape, leaders face a critical decision: which technology should they adopt? When it comes to Large Language Models (LLMs), a clear trend has emerged: a staggering 76% of companies are choosing open-source options.

Let’s dive into why open-source LLMs are winning the enterprise race, from boosting your bottom line to securing your most valuable data.

Unlocking Cost Savings with Open-Source LLMs

One of the most compelling reasons for adopting open-source LLMs is cost. Unlike proprietary models, which charge recurring licensing or usage fees, open-source alternatives are typically free to use. This is a game-changer for startups and SMEs, allowing them to leverage cutting-edge AI without a massive budget.

You still need to budget for the infrastructure, such as GPU compute and storage, to run the models, but eliminating licensing fees can substantially lower the total cost of ownership (TCO). As noted by industry experts at DataCamp, these financial benefits are a primary driver of adoption.

Platforms like Databricks are key to keeping these operational costs under control. For those looking to maximize every dollar, Dateonic offers expert guidance on optimizing Databricks clusters for performance and cost.

Key Financial Wins with Open Source:

- Zero Licensing Fees: Use powerful models for free.

- Lower TCO: Reduce the total cost of your AI stack.

- Accessible Power: Put advanced AI within reach of businesses of all sizes.

Enhanced Data Privacy and Security

In an age of constant data threats, security is non-negotiable. A major concern with proprietary LLMs is that sensitive company data must be sent to third-party providers. A survey by insideAI News reflected this concern, revealing that over 75% of enterprises are wary of commercial LLMs due to data privacy risks.

Open-source LLMs address this problem directly. By hosting models in-house or on your private cloud, you keep full control over where your data is stored and processed. This principle of data sovereignty is crucial for protecting sensitive information and ensuring regulatory compliance.

Hosting your own LLM means:

- Your data stays yours: It never leaves your secure environment.

- You control access: Decide who can reach your models and data, reducing exposure to external breaches.

- You simplify compliance: Meet strict data protection regulations such as GDPR more easily.

For businesses seeking the best platforms for managing important company data, Dateonic provides expert advice on implementing the most secure and efficient solutions.

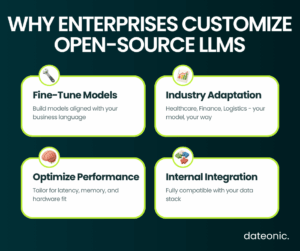

Unparalleled Flexibility and Customization

Off-the-shelf solutions rarely fit perfectly. Open-source LLMs can be fine-tuned and customized for your specific business needs, a depth of control that proprietary models, which typically expose only limited customization options through their APIs, rarely match.

With an open-source model, your data science team can:

- Fine-tune on company data for hyper-relevant results.

- Adapt for specialized industry tasks.

- Optimize for performance on your specific hardware.

This deep customization, as highlighted by VentureBeat, allows enterprises to build highly effective applications that drive real business value. To maximize the output of your models, it is crucial to employ the right performance techniques. Learn more about the top 5 Databricks performance techniques to supercharge your AI initiatives.

Transparency and Collaborative Development

Trust is built on transparency. With open-source LLMs, developers can inspect the code and model weights to understand the architecture, probe for potential biases, and verify security. Instead of a "black box," you get technology you can audit.

This philosophy also fosters a vibrant global community that drives rapid progress. As IBM explains, this collaborative environment leads to shared innovation and a more robust ecosystem for everyone.

Benefits of Open Collaboration:

- Full Transparency: Inspect and modify the source code.

- Community Innovation: Benefit from global developer contributions.

- Rapid Improvements: Get faster bug fixes and new features.

Mitigating Vendor Lock-in Risks

Relying on a single proprietary vendor is a risky strategy. It leaves you vulnerable to their business decisions, from sudden price hikes to changes in service or even product discontinuation. This "vendor lock-in" can cripple your long-term AI strategy.

Open-source LLMs offer freedom and control. By building on open standards, you avoid dependency on any single company. As Hatchworks points out, this strategic independence is a key reason enterprises are embracing open-source to de-risk their AI investments.

Avoid the Risks of Vendor Lock-in:

- Sudden Price Hikes: Control your budget without surprises.

- Policy Changes: Don’t be subject to a vendor’s whims.

- Strategic Freedom: Switch models or providers as needed.

| Feature | Open-Source LLMs | Proprietary LLMs |
|---|---|---|
| Cost | No licensing fees (infrastructure costs apply) | Licensing or usage fees |
| Data Privacy | Full control (self-hosted) | Data sent to a third party |
| Customization | Full model access | Limited or none |
| Transparency | Inspectable code and weights | Black-box systems |
| Vendor Lock-in | Low risk | High risk |
| Deployment Options | Any cloud or on-premises | Restricted, typically vendor-hosted |

Conclusion

The trend is undeniable: the future of enterprise AI is open. The combined advantages of cost savings, data control, deep customization, transparency, and freedom from vendor lock-in make a powerful business case.

By embracing open-source AI, companies can reduce risk while unlocking unprecedented opportunities for innovation and growth.

Ready to harness the power of open-source LLMs for your business? Dateonic is your trusted partner for implementing and optimizing AI solutions on the Databricks platform.

Our team of experts can help you navigate the complexities of open-source AI, from selecting the right model to deploying and scaling it for maximum impact. Contact us today for a free consultation and discover how Dateonic can help you build your AI future.