The debate over Databricks vs Snowflake for enterprises is no longer just about raw performance or upfront pricing. The true cost and value of each platform are revealed in its Total Cost of Ownership (TCO), which includes:

- Direct costs

- Engineering overhead

- Operational expenses

- Return on Investment (ROI) from integrated AI and machine learning capabilities

This guide shifts the focus from a simple price/performance comparison to a holistic analysis of TCO. We will compare core architectures, break down pricing models, explore hidden engineering costs, and evaluate the value of integrated AI/ML features to provide a comprehensive perspective for your enterprise’s future.

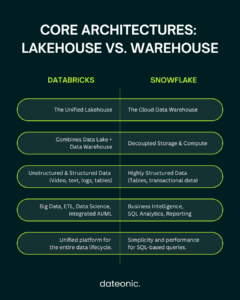

Understanding the Platforms – Core Architectures

At the heart of the Databricks vs Snowflake debate are two fundamentally different architectures. Understanding these differences is the first step in appreciating their long-term cost implications.

Databricks is built on the “lakehouse” model, a modern paradigm that combines the best of data lakes and data warehouses. Powered by Apache Spark and Delta Lake, it provides a single, unified platform for all data, analytics, and AI workloads.

This design lets it handle both structured and unstructured data, which suits big data processing and complex data science.

Snowflake, on the other hand, is a premier cloud-native data warehouse. Its unique architecture separates storage and compute, offering impressive scalability and performance for structured data querying.

This SQL-centric approach has made it a favorite for business intelligence (BI) and data warehousing use cases due to its accessibility and ease of use.

These distinct philosophies create key architectural differences for enterprises:

- Data Handling: Databricks excels with the unstructured and semi-structured data crucial for modern AI/ML, while Snowflake is highly optimized for the structured data used in traditional BI and reporting.

- Ecosystem: Databricks offers a more integrated, end-to-end platform for the entire data lifecycle, whereas Snowflake often requires integration with other tools for advanced ML and AI.

- Flexibility: The Databricks lakehouse offers greater flexibility in data formats and processing engines, while Snowflake’s managed environment provides simplicity and ease of use.

These choices directly impact long-term costs, from data processing efficiency to the need for supplementary tools and specialized talent.

Direct Costs – Pricing Models Breakdown

While TCO goes far beyond direct costs, the pricing models of Databricks and Snowflake are a significant factor in any enterprise budget.

Databricks’ pricing is based on Databricks Units (DBUs), which are consumed for compute resources, in addition to the underlying cloud provider fees. This model offers a high degree of tunability.

For example, enterprises can:

- Leverage spot instances for significant savings on non-critical workloads.

- Optimize cluster configurations to match specific job requirements.

- Benefit from performance advantages that lower overall compute time, with some benchmarks claiming up to 9x lower ETL costs compared to Snowflake.

Snowflake’s pricing is based on credits used for compute and separate charges for storage. This model is known for its predictability and ease of understanding, which is a major benefit for budgeting. However, it can be less tunable for cost optimization, especially for bursty or unpredictable workloads.
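As a rough sketch of how the two billing models differ, the Python snippet below estimates a monthly bill under each. Every rate, usage figure, class name, and function name here is a hypothetical placeholder chosen for illustration, not a published price or vendor API. It also assumes that spot discounts apply only to the underlying cloud VM fees and not to DBU charges; substitute the numbers and rules from your own contract and cloud bill.

```python
from dataclasses import dataclass


@dataclass
class DatabricksUsage:
    dbus_consumed: float        # DBUs consumed in the billing period
    price_per_dbu: float        # hypothetical contract rate, USD per DBU
    cloud_vm_cost: float        # cloud provider fees at on-demand prices, USD
    spot_fraction: float = 0.0  # share of VM spend moved to spot instances (0..1)
    spot_discount: float = 0.0  # assumed discount on spot capacity (0..1)


@dataclass
class SnowflakeUsage:
    credits_consumed: float     # compute credits used in the billing period
    price_per_credit: float     # hypothetical rate, USD per credit
    storage_tb: float           # average stored data, TB
    price_per_tb: float         # hypothetical rate, USD per TB per month


def databricks_monthly_cost(usage: DatabricksUsage) -> float:
    """DBU charges plus cloud fees, with spot savings applied to the VM portion only."""
    vm_cost = usage.cloud_vm_cost * (1 - usage.spot_fraction * usage.spot_discount)
    return usage.dbus_consumed * usage.price_per_dbu + vm_cost


def snowflake_monthly_cost(usage: SnowflakeUsage) -> float:
    """Compute credits plus a separate storage charge."""
    return (usage.credits_consumed * usage.price_per_credit
            + usage.storage_tb * usage.price_per_tb)


if __name__ == "__main__":
    # Illustrative inputs only; none of these numbers come from a price list.
    dbx = DatabricksUsage(dbus_consumed=10_000, price_per_dbu=0.5,
                          cloud_vm_cost=4_000, spot_fraction=0.5, spot_discount=0.5)
    snow = SnowflakeUsage(credits_consumed=2_000, price_per_credit=3.0,
                          storage_tb=10, price_per_tb=25.0)
    print(f"Databricks estimate: {databricks_monthly_cost(dbx):,.2f} USD")
    print(f"Snowflake estimate:  {snowflake_monthly_cost(snow):,.2f} USD")
```

The extra parameters on the Databricks side (`spot_fraction`, `spot_discount`) are what "tunability" looks like in practice: more levers to lower the bill, but also more inputs to forecast.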

For a deeper dive into optimizing your Databricks costs, check out our guide on Optimizing Clusters in Databricks.

Engineering and Operational Costs – The Hidden Expenses

The “sticker price” of a data platform is only one part of the TCO equation. Engineering and operational costs, often hidden, can significantly impact your budget and time-to-value.

Databricks, with its foundation in Apache Spark, requires a higher level of technical expertise, which can mean a steeper learning curve. This can increase hiring and training costs for specialized talent. However, its unified platform can ultimately reduce operational overhead by consolidating the tools needed for data engineering, data science, and MLOps into a single environment.

In contrast, Snowflake’s SQL-native interface is incredibly accessible to a broad range of data analysts and engineers, reducing training time and accelerating time-to-value for BI projects. The trade-off is that complex data science workloads may require introducing additional tools, which can create data silos and increase operational complexity.

Key Impacts on Enterprise TCO:

- Talent & Training: Consider the cost of hiring specialized Spark developers versus the broader availability of SQL experts.

- Time-to-Value: Snowflake often delivers quicker wins for BI, while Databricks can accelerate the entire end-to-end AI lifecycle.

- Operational Overhead: Compare the costs of managing a multi-tool stack versus the efficiencies of a unified platform.

Partnering with a Databricks expert like Dateonic can help mitigate these engineering challenges. Learn how to Choose the Best Databricks Consultancy Partner for Your Business.

The Value of Integrated AI/ML Features

In the age of AI, the ability to seamlessly integrate machine learning into your data workflows is a competitive necessity. This is where the TCO discussion shifts from pure cost to tangible value.

Databricks was built with AI and ML in mind. Its lakehouse architecture and integrated tools like MLflow and AutoML provide a robust, end-to-end platform for the entire machine learning lifecycle. This tight integration accelerates the development and deployment of AI models, enabling enterprises to derive value from their data faster without needing costly add-on tools.

Snowflake is also expanding its AI/ML capabilities with features like Cortex AI. However, its core architecture remains more focused on data warehousing. While building AI applications on Snowflake is possible, it often requires more custom integration and reliance on third-party tools compared to Databricks’ native capabilities.

This integration directly impacts TCO by:

- Reducing Tooling Costs: Eliminating the need for separate MLOps and data science tools.

- Accelerating Time-to-Insight: Unifying the data and AI lifecycle increases the ROI of data projects.

- Future-Proofing Your Stack: A platform with native AI/ML capabilities is a better long-term investment.

Explore the Top 5 Enterprise AI Platforms for 2025 to understand the broader landscape.

Calculating Total Cost of Ownership (TCO) for Enterprises

To compare Databricks’ TCO with Snowflake’s, you need a formula that captures every component discussed so far:

TCO = Direct Costs + Engineering/Operational Costs – ROI from AI/ML
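The formula can be sketched in a few lines of Python. This is a minimal illustration, assuming the ROI term is expressed as a dollar value returned over the same period as the costs; the function name and all input figures are hypothetical, and the two options are deliberately left unnamed because the numbers are invented.

```python
def total_cost_of_ownership(direct_costs: float,
                            engineering_operational_costs: float,
                            ai_ml_return: float) -> float:
    """TCO = direct costs + engineering/operational costs - value returned by AI/ML.

    All three inputs must cover the same period (for example, three years)
    and the same currency, otherwise the result is meaningless.
    """
    return direct_costs + engineering_operational_costs - ai_ml_return


if __name__ == "__main__":
    # Hypothetical three-year figures in USD, for illustration only.
    option_a = total_cost_of_ownership(500_000, 400_000, 300_000)
    option_b = total_cost_of_ownership(450_000, 250_000, 50_000)
    print(f"Option A TCO: {option_a:,} USD")
    print(f"Option B TCO: {option_b:,} USD")
```

Note how the option with the higher direct and engineering costs can still end up with the lower TCO once the AI/ML return is subtracted, which is why the last term deserves as much scrutiny as the first two.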

This table provides a high-level comparison to frame your analysis:

| Aspect | Databricks | Snowflake |
|---|---|---|
| Direct Compute Costs | Lower for ETL (up to 9x by some benchmarks), tunable. | Predictable, higher for complex workloads. |
| Engineering Investment | Higher initial, scalable. | Lower, SQL-focused. |
| AI/ML Integration Value | High ROI, built-in tools. | Add-on dependent. |
| Overall TCO for AI-Heavy Enterprises | Potentially 20-30% lower long-term. | Better suited to BI-only use cases. |

Your ideal platform depends on your primary use case. For BI-focused enterprises where traditional reporting is key, Snowflake may offer a more straightforward TCO.

However, for AI-driven enterprises building complex models and leveraging unstructured data, Databricks’ integrated value proposition can lead to a significantly lower TCO over time.
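The phrase "over time" matters here: a platform with a higher initial engineering investment only wins once its lower running costs have paid back the difference. The sketch below finds that break-even year under a deliberately simple assumption of one upfront cost plus a constant annual net cost. The function names and figures are hypothetical and not derived from any benchmark.

```python
from typing import Optional


def cumulative_tco(upfront: float, annual_net_cost: float, years: int) -> float:
    """Upfront investment plus a constant annual net cost over the given years."""
    return upfront + annual_net_cost * years


def break_even_year(upfront_a: float, annual_a: float,
                    upfront_b: float, annual_b: float,
                    horizon: int = 10) -> Optional[int]:
    """Return the first year in which option A's cumulative TCO is no higher
    than option B's, or None if that never happens within the horizon."""
    for year in range(1, horizon + 1):
        if cumulative_tco(upfront_a, annual_a, year) <= cumulative_tco(upfront_b, annual_b, year):
            return year
    return None


if __name__ == "__main__":
    # Hypothetical USD figures: A costs more upfront but less per year.
    year = break_even_year(upfront_a=300_000, annual_a=200_000,
                           upfront_b=100_000, annual_b=250_000)
    print(f"Option A breaks even in year: {year}")
```

If the break-even year falls beyond your planning horizon, the higher upfront investment is hard to justify on cost alone.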

For a more detailed platform comparison, see our Databricks vs Snowflake Comparison article.

Conclusion and Recommendations

When choosing between Databricks and Snowflake for your enterprise, the best choice depends on your strategic priorities. If your focus is primarily on business intelligence and you have a strong SQL-based team, Snowflake offers a simple, predictable, and powerful solution.

However, for enterprises serious about leveraging AI and machine learning for a competitive advantage, the TCO argument leans in favor of Databricks. While the initial engineering investment may be higher, the long-term value of its integrated AI/ML capabilities and its potential for lower ETL costs make it a compelling choice for future-focused CTOs.

To unlock the full potential of your data and optimize your Databricks TCO, partner with an expert. Visit Dateonic for a free consultation and let us help you build an AI-ready data platform that delivers real business value.